This is a post-mortem investigation of the delays in processing of assets and collections we saw in iconik between January 23-30, 2020. The purpose of this document is to document what happened and when, and what we can do in the future to improve the service to avoid this kind of setback.

Description

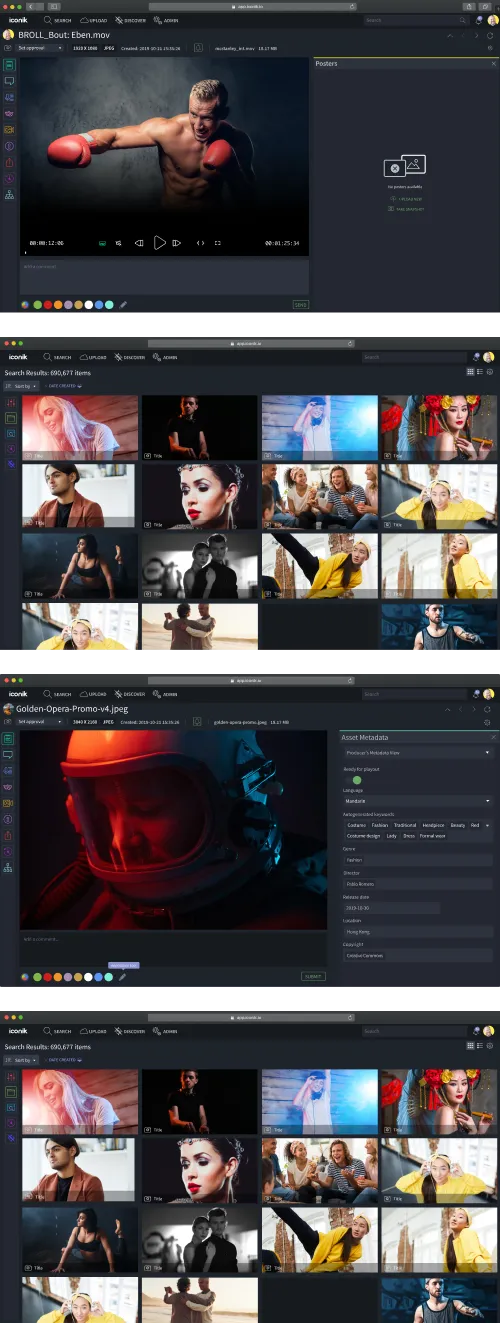

All throughout the seven days, we were getting more and more support tickets about:

- Assets and collections not appearing when searching

- Metadata not being up to date in the list view

- Other similar disturbances.

Since we have graphs showing different metrics from the system we could correlate these issues with spikes in queue lengths in various message queues in the system. We have seen these spikes in the past ever since we introduced bulk actions during 2019. The system is designed to handle spikes like this by auto-scaling the various components that are involved in the processing of search index updates and this has worked well up until now but this time the amount of updates was too large for the system to handle and the issues became visible to end-users.

We had already planned work to separate bulk actions from interactive user actions so that interactive actions always take precedence over background and bulk actions, and when it became clear that this issue was causing user-visible delays we started this work directly.

Towards the end of the week the issue became critical where users were experiencing delays of more than one hour between when an action was performed and the results becoming visible in the system. It also started becoming clear that manual bulk actions could not account for the frequency of the delays, which started occurring several times per day. Because of this, we started digging deeper into our logs to find what was causing this. This took longer than it should have because some information about what the original initiator of a background task gets lost during processing.

Around the same time the changes we started implementing to separate the interactive and bulk actions were ready we were able to identify the actual root cause of the delays. This actually was a cloud scan performed by a customer which produced large amounts of updates in short bursts. These bursts were mistakenly identified as bulk actions on large collection trees causing the incorrect diagnosis. Once we stopped this cloud scan the system quickly started to respond to new operations even though it still took a few hours to process the backlog.

Timeline

January 23 - The first report of user-visible delays came in. We could correlate the reports to large numbers of messages and since the system had already calmed down by the time we investigated our support staff concluded that this was the same kind of issue as earlier and attributed it to bulk actions. The customers who filed the initial tickets reported that the system status was back to normal.

January 25-26 - Over the weekend, our support staff noticed a large amount of asset indexing tasks when monitoring our graphs. They responded by manually scaling up the number of index workers to try to meet the demand. This helped as a temporary measure but this issue was flagged as an urgent one to be brought up in next week’s planning.

January 27 - We decide to bump priority on the already planned work to separate interactive and bulk actions and started work on implementing it immediately.

January 28 - The delays started becoming more frequent than earlier and more customers started noticing. Especially customers who have built custom workflows where rapid delivery of content is essential were affected.

January 29 - The changes in queue handling went into testing in our staging environment.

January 30 - The root cause was identified as a cloud bucket scan against a specific customer bucket. This scan was aborted after communication with the customer and system operation returned to normal. We also deployed the changes to the queue handling since this was something we had already wanted to do before this issue.

Improvements

This section outlines the improvements we have identified to help avoid this kind of issue in the future and to help diagnose other similar issues.

Short term

These are changes we can have a clear understanding of their impact which can be implemented directly or within the next few weeks.

Separate the message queue servers

We currently run the message queue servers on the same physical hardware as many of the other components of the system in our kubernetes cluster. This is good from a resource utilization point of view as it allows the cluster to schedule resources effectively and get the most capacity out of the hardware. The downside of this approach is that we don’t have full control over what runs on the same hardware as the message queue servers, competing for resources, and since the message queue is such a vital part of the system we have decided to move that onto its own set of servers. This makes it easier to monitor and control what resources are available to the message queues.

Monitor and alert how long tasks stay in queues

One metric we currently do not have is how long it takes from a task to get scheduled on a queue until it is executed. By adding this metric for our different queues and types of tasks we will be able to better see what the user experience is like and take action faster. This may also be a metric we can use to inform our auto-scaling algorithm in order to better adjust to increasing demand. Alert on queue length We have metrics for the length of our queues but up until now we have not had any active alerts to notify the support staff if the queues grow above a certain size. This is something we will implement in our monitoring software.

Improve logging

Our logging for background tasks currently does not contain all the information we need to be able to easily correlate an issue like this. We will improve this so we can identify the root-cause for issues like this faster.

Medium Term

These changes are relatively well understood and can be implemented after some additional design work. There are no-medium term improvements identified this time.

Long Term

These are changes that require extensive investigation, either because the impact isn’t well understood or because they will impact the user experience of the service and care must be taken to fit the solution in with existing features.

More separation between customers

With a multi-tenant system like iconik there is always some risk of interference between customers since they share resources. The intention is that resources should be shared fairly between customers so if one customer submits a lot of tasks then that will not prevent other customers’ tasks to get their fair share of the common resources. This strategy is implemented for several parts of the system, including the transcode queue and storage scanning. The update of our search index is one area where this is not the case. This part of the system was designed to rapidly process all index updates in a timely fashion so we had not previously implemented a fair round-robin algorithm here, which we are now going to do. An investigation into how to architect this has already begun.

Future Investigation

We will have to spend more time investigating why this particular scan caused such wide-spread disturbance in the system. We have some suspicions but will need to replicate the setup in a test environment to investigate the issue without affecting the production system.

Conclusions

Timely updates of our search indexes are critical for customers. Since these not only drive searches but also list views and several other parts of the user experience. When the index updates lag behind and users cannot see the result of changes they make in the system they cannot distinguish between the index being up to date and the system losing the update completely. It is therefore important for users’ trust in the system that updates become visible in the user interface fast. It is also important that the actions of one customer don’t affect the processing of another customer and we have to determine additional places where one customer can essentially perform a Denial of Service attack on other customers, either with or without the intention to do so.

Give iconik a try

Schedule a personalized iconik demo with one of our experts and start your free trial today.

Parham Azimi is CEO & Co-Founder of iconik Media. His journey as a software entrepreneur has led hime to found multiple media management companies that serve some of the world’s largest brands. He is a strong advocate for building platforms to allow users to define how they want to work.

Take the MAM Diagnostic Quiz

Need better media management?

Get tailored results to see where your biggest wins with iconik would be.

-Mar-05-2024-07-18-15-7861-PM-Mar-05-2024-09-56-24-4650-PM.png)